Demonstrating ubiquitous understanding of human motion and emotion.

Sensing, interpreting, and designing movement for interaction with computing systems could give machines a greater capacity to interpret users' actions and infer their intentions. It could also let machines communicate personalized, nuanced messages that meet individual users' needs, much as humans do in conversation. Harnessing human movement as a medium for communicating with technology would have applications in more sensitive assistive technologies, more perceptive smart homes, and more socially capable robots and conversational virtual characters. To realize this vision, we must improve our understanding of how humans imbue body movement with meaning and extract meaning from it, so that we can teach computers to do the same.

Traditional linguistic and cognitive science approaches to interpreting meaning from movement tend to consider the shapes of specific, culturally defined gestures, the timing of gestures relative to speech, and the spatial referencing of deictic (pointing) gestures. Human-computer interfaces echo this reasoning: the design of movement-based interactions is dominated by deictic gestures and by one-to-one mappings from mechanically specific movements to system actions. Such gestures, even when intuitive, must be performed intentionally, yet the cognitive science literature suggests that non-verbal communication and its interpretation likely occur at non-conscious levels of processing. To capture the emotional state and intention that people communicate non-consciously through movement, I suggest we look to methodology from the field of dance.

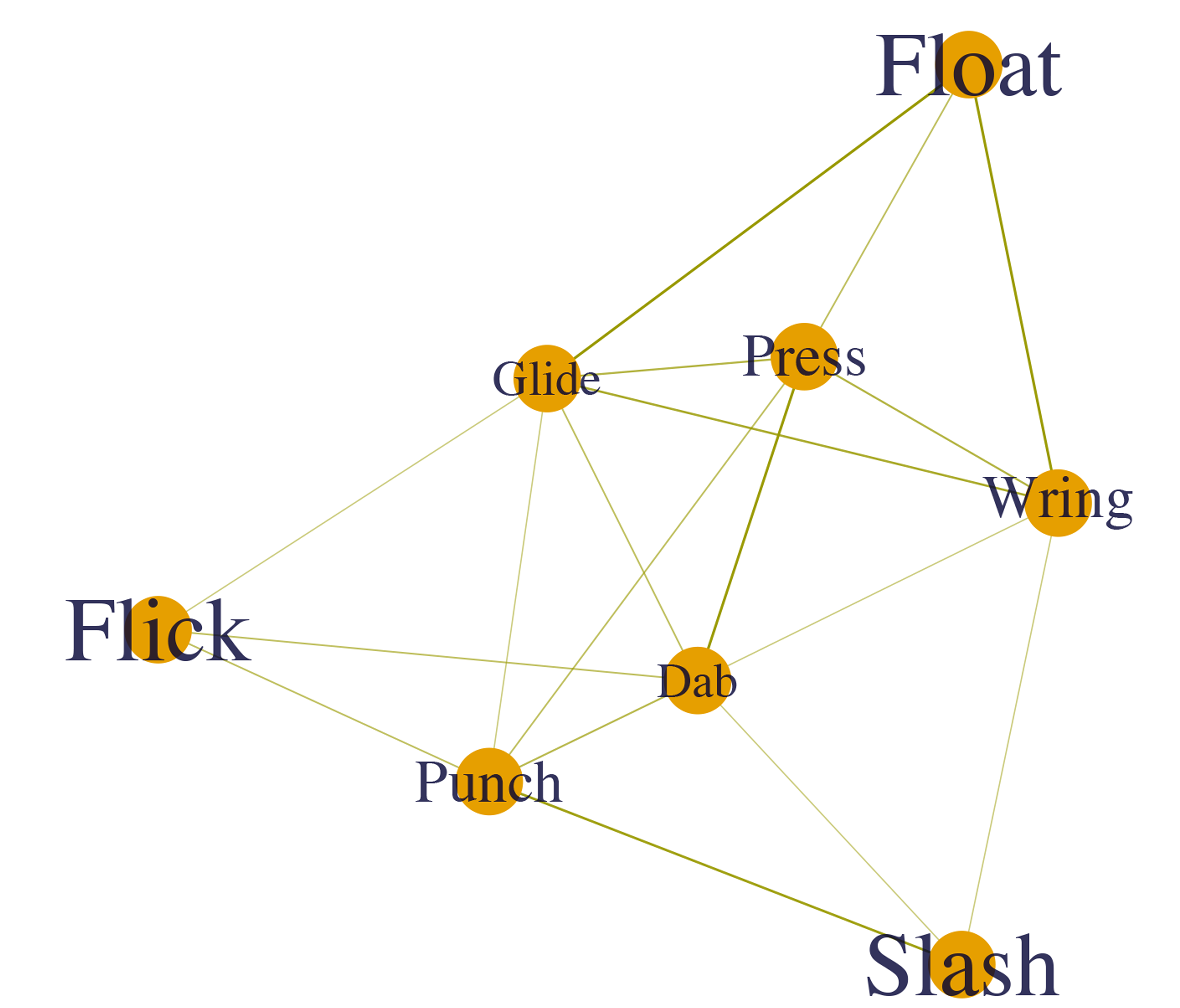

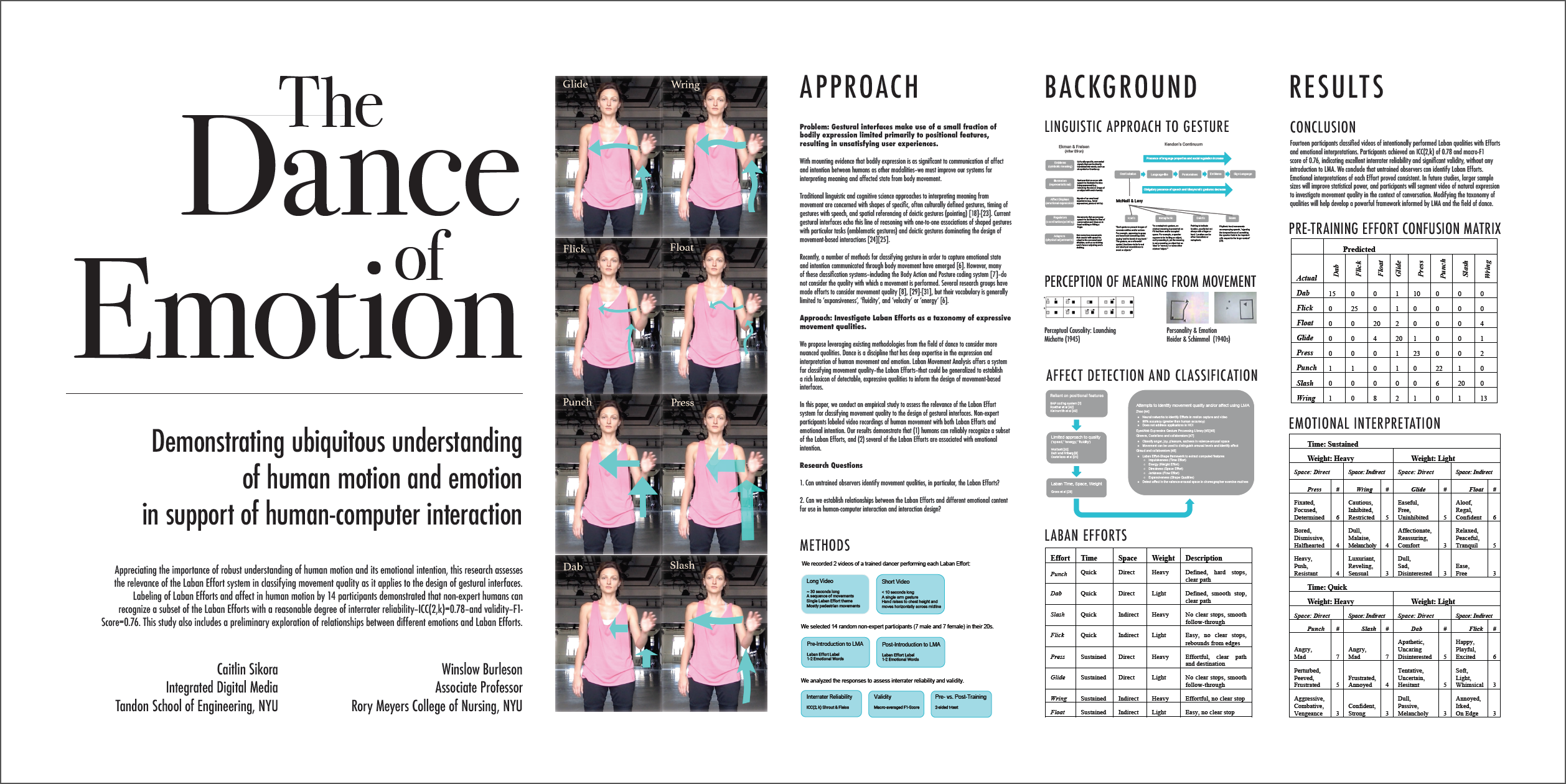

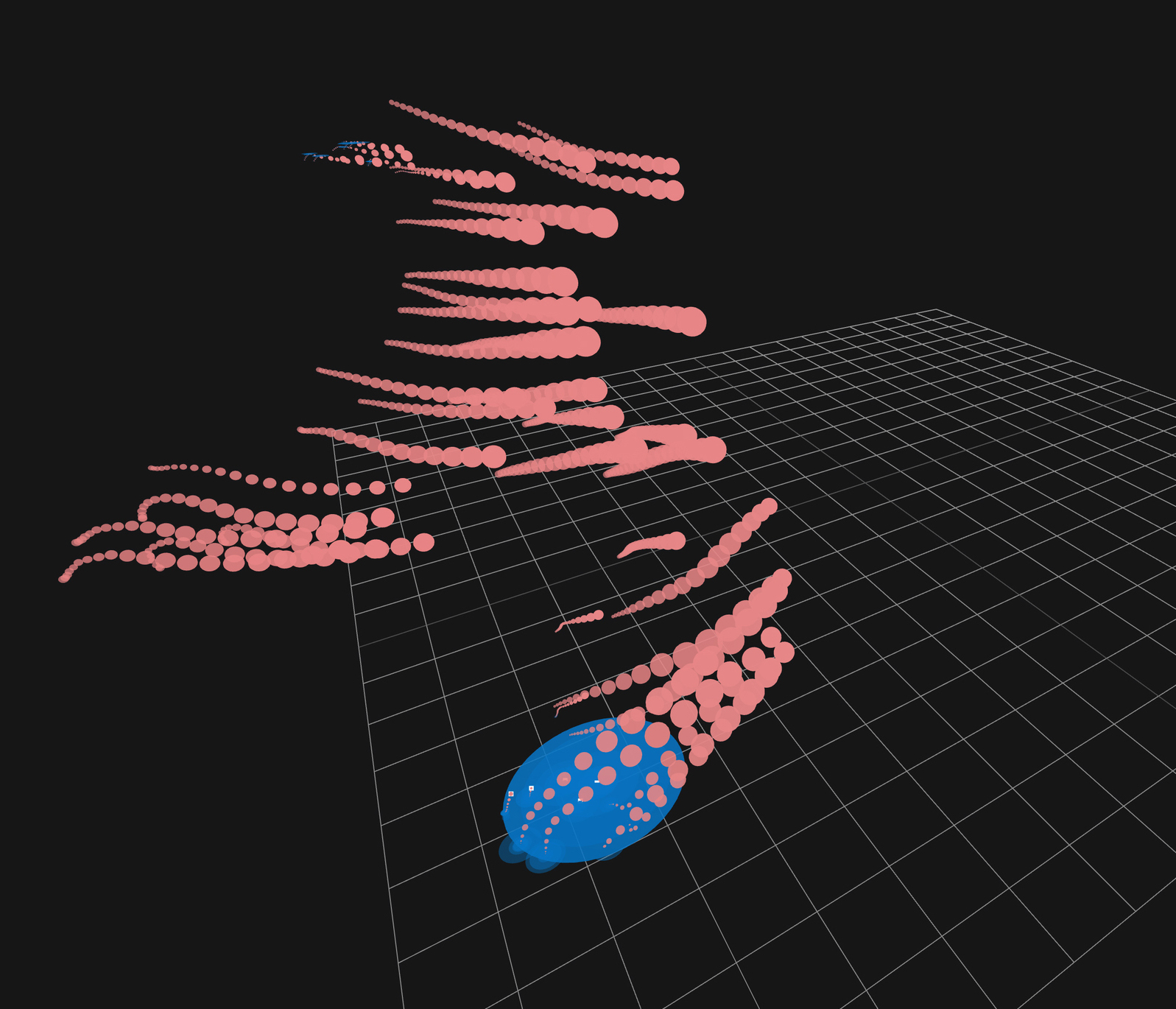

Choreography and Laban Movement Analysis, both studied within the dance discipline, offer systems for interpreting meaning from a person's physical movements based on movement quality and context. These systems can be generalized into a lexicon of detectable, expressive qualities of human movement that should inform the design of gestural interfaces, both on and off the screen. In this research, I conducted a series of pilot studies to assess whether the Laban Efforts (Punch, Slash, Dab, Wring, Press, Flick, Float, and Glide), which classify movement quality, are relevant to the design of gestural interfaces.
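In standard Laban Movement Analysis, the eight Efforts listed above are the Basic Effort Actions, and each one combines a single pole from each of three Effort factors: Weight (strong or light), Time (sudden or sustained), and Space (direct or indirect). As a minimal sketch of how such a lexicon might be encoded in a gestural interface, assuming some upstream classifier already outputs a discrete value for each factor, the hypothetical table and helpers below (`EFFORT_ACTIONS`, `effort_action`, `factors_of`) map factor combinations to Effort names and back. This representation is illustrative only and is not taken from the studies.

```python
from itertools import product

# The three Effort factors used by the Basic Effort Actions, each with two poles.
WEIGHT = ("strong", "light")
TIME = ("sudden", "sustained")
SPACE = ("direct", "indirect")

# (Weight, Time, Space) -> Effort Action, per standard Laban Movement Analysis.
EFFORT_ACTIONS = {
    ("strong", "sudden", "direct"): "Punch",
    ("strong", "sudden", "indirect"): "Slash",
    ("strong", "sustained", "direct"): "Press",
    ("strong", "sustained", "indirect"): "Wring",
    ("light", "sudden", "direct"): "Dab",
    ("light", "sudden", "indirect"): "Flick",
    ("light", "sustained", "direct"): "Glide",
    ("light", "sustained", "indirect"): "Float",
}

# Sanity check: the table covers every factor combination exactly once.
assert set(EFFORT_ACTIONS) == set(product(WEIGHT, TIME, SPACE))


def effort_action(weight, time, space):
    """Hypothetical helper: name the Effort Action for one pole of each factor."""
    try:
        return EFFORT_ACTIONS[(weight, time, space)]
    except KeyError:
        raise ValueError(f"unknown factor combination: {weight}, {time}, {space}") from None


def factors_of(action):
    """Hypothetical helper: inverse lookup of an Effort Action's (Weight, Time, Space)."""
    for factors, name in EFFORT_ACTIONS.items():
        if name == action:
            return factors
    raise ValueError(f"unknown Effort Action: {action}")


print(effort_action("light", "sustained", "indirect"))  # prints Float
print(factors_of("Wring"))  # prints ('strong', 'sustained', 'indirect')
```

Keying the table on factor tuples rather than on names means that a system which detects only one factor reliably (for example, sudden versus sustained timing) can still narrow a movement down to four candidate Efforts.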

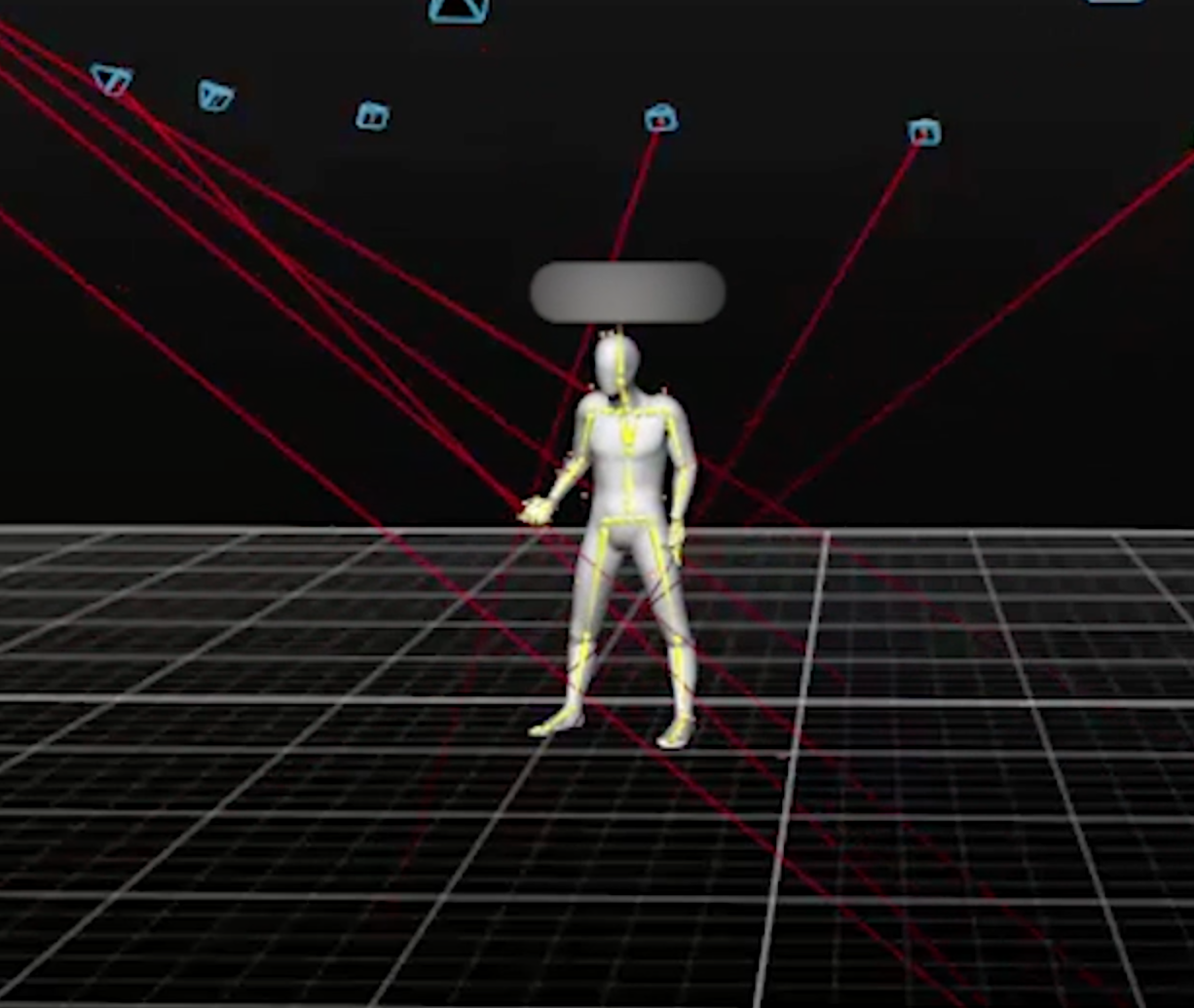

In the first study, I conducted a survey in which non-expert participants labelled the movements of a person intentionally performing each of the Laban Efforts, assigning each movement a Laban Effort and an emotional interpretation. This survey showed that non-experts can recognize a subset of the Laban Efforts with reasonable inter-rater reliability (ICC(2,k) = 0.78) and validity (F1-score = 0.76). In the second study, I developed a web application with which non-expert participants segmented videos of other people moving expressively and labelled the segmented gestures with Laban Efforts and emotional interpretations. I completed this research by drawing connections between several of the Laban Efforts and consistently interpreted and experienced emotional intentions. Future work will strengthen the associations between these qualities and their emotional meanings to provide a framework for their use in the design of gestural interfaces.
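For readers unfamiliar with the two reported metrics, the sketch below computes ICC(2,k) (two-way random effects, absolute agreement, mean of k raters, in the Shrout and Fleiss formulation) and a macro-averaged F1-score in plain Python. It assumes the ratings have already been coded as a numeric matrix with one row per rated movement and one column per rater, and that validity is scored by comparing participants' labels with the performer's intended Effort. How the studies actually coded categorical labels, and whether their F1-score was macro-, micro-, or weighted-averaged, is not stated here, so the function names `icc_2k` and `macro_f1` and the macro-averaging choice are assumptions.

```python
from statistics import mean


def icc_2k(ratings):
    """ICC(2,k): two-way random effects, absolute agreement, average of k raters.

    `ratings` is a list of n rows (rated movements), each holding k numeric
    ratings (one per rater). Assumes n >= 2, k >= 2, and no missing values.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Partition the total sum of squares into targets, raters, and residual.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = ss_total - ss_rows - ss_cols

    bms = ss_rows / (n - 1)             # between-targets mean square
    jms = ss_cols / (k - 1)             # between-raters mean square
    ems = ss_err / ((n - 1) * (k - 1))  # residual mean square
    return (bms - ems) / (bms + (jms - ems) / n)


def macro_f1(true_labels, predicted_labels):
    """Unweighted mean of per-class F1 over every label seen in either list."""
    pairs = list(zip(true_labels, predicted_labels))
    classes = sorted(set(true_labels) | set(predicted_labels))
    scores = []
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical toy data, not results from the studies.
print(icc_2k([[1, 2], [2, 3], [3, 4]]))  # prints 0.8
print(macro_f1(["Punch", "Punch", "Float", "Float"],
               ["Punch", "Float", "Float", "Float"]))
```

In the toy ICC example the second rater always scores one point higher than the first, so the raters rank the movements identically yet ICC(2,k) falls below 1: the absolute-agreement form counts systematic rater offsets as disagreement.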

The first of these studies was accepted at the Affective Computing and Intelligent Interaction (ACII) conference in 2017. The full series of studies is detailed in the thesis below.